10 Facts You Should Know About Computer Programming


Modern technology is such a normal part of our everyday lives that we can easily take these conveniences for granted. Whether it’s a phone, computer, or a simple machine, everything around us is programmed to work a certain way and complete different tasks.

If you’re interested in knowing more about computer programming, you’re in for a treat! Today, we’ll talk about 10 facts about computer programming that’ll blow you away!

Here are the 10 facts for you - scroll down to read more detail about each one.

10 Facts about Computer Programming

The first computer programmer was a female mathematician

Many machines did simple math, but Charles Babbage’s Analytical Engine was the first computer we consider “programmable”

Thomas Edison was the first person to use the term “bug” for a technical fault - and the first recorded computer bug was a real bug!

The first computer virus was not meant to be harmful.

The first computer game didn’t make any money.

Computer programming played an important role in ending World War II.

It took less code to send a man to space than to run a smartphone!

There are currently over 700 computer languages.

The “C” programming language had a predecessor called “B”.

Some projects require using multiple programming languages.

Without further ado, let’s get started!

1. The First Computer Programmer Was a Female Mathematician

Ada Lovelace was a brilliant British mathematician and the daughter of the English poet Lord Byron. She’s also considered to be the first-ever computer programmer in history.

Ada Lovelace

She was born on December 10, 1815. Ada excelled in mathematics and was fascinated by numbers and number theory. Later in life, she began working with Charles Babbage, one of the best-known mechanical engineers of the 19th century. He designed one of the earliest mechanical computing machines, the Difference Engine, which is considered one of the cornerstones of the modern calculator.

Ada became an essential contributor to Babbage’s work and one of his closest collaborators. She also translated the work of Luigi Federico Menabrea, an Italian engineer who later became Prime Minister of Italy.

Her Notes on Menabrea’s Article

One of Menabrea’s articles described Babbage’s Analytical Engine. In addition to translating it, Lovelace annotated the article with her own notes, which ended up three times as long as Menabrea’s original text. She published the translation and notes in 1843 in an English journal.

In her notes, Ada described how the machine could be programmed to calculate Bernoulli numbers. This is considered to be the first algorithm written to be carried out by a machine, making it the first computer program. Consequently, this makes her the first computer programmer. She also predicted that computers would do more than calculations.
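To give a feel for the kind of calculation Ada had in mind, here is a minimal Python sketch that computes the first few Bernoulli numbers. It is not a reconstruction of her program: it uses a standard modern recurrence and the convention in which the second number is -1/2, and the function name `bernoulli_numbers` is made up for this example.

```python
from fractions import Fraction
from math import comb  # available in Python 3.8 and later


def bernoulli_numbers(n):
    """Return [B_0, ..., B_n] as exact fractions (convention: B_1 = -1/2)."""
    b = [Fraction(1)]  # B_0 = 1
    for m in range(1, n + 1):
        # Recurrence: B_m = -1/(m+1) * sum of C(m+1, k) * B_k for k < m
        total = sum(comb(m + 1, k) * b[k] for k in range(m))
        b.append(-total / (m + 1))
    return b


if __name__ == "__main__":
    for index, value in enumerate(bernoulli_numbers(8)):
        print(f"B_{index} = {value}")
```

Using `Fraction` keeps every value exact, which matters here because Bernoulli numbers are fractions rather than whole numbers.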

Sadly, Ada Lovelace died in 1852 at the early age of 36. However, her legacy still remains to this day. Every year, on the second Tuesday of October, Ada Lovelace Day is celebrated.

2. The Earliest Programmable Computer

Many machines were designed to do one specific task. For example, Gottfried Wilhelm Leibniz, a German polymath, invented the stepped reckoner. This was the fancy name given to the first calculator capable of doing the four basic math operations. Although it could compute, it wasn’t programmable.

In the 1830s, Charles Babbage designed the Analytical Engine. As designed, the machine would have been as large as a small house and would have used 6 steam engines to run. This was the earliest design for a programmable computer.

The Analytical Engine

The huge device consisted of 4 main compartments. The first and most important one was called “the mill”, which is the equivalent of the CPU. There was also the “reader” and “printer” parts, which are the parts responsible for input and output. Finally, there was the “store” compartment, which is the computer’s storage and memory unit.

Babbage also planned to program the huge machine with 3 different kinds of punch cards, which the machine would read: “variable cards”, “number cards”, and “operation cards”.

The Idea Behind the Analytical Engine

The idea of using punch cards was inspired by the Jacquard loom, which was invented in 1804. This machine used punch cards to control the patterns woven into fabric.

The use of these instruction cards made the Analytical Engine a programmable device. In fact, it was far more flexible than any other machine then in existence. This was the machine where Lovelace’s algorithm would have been implemented.

Unfortunately, Babbage had a lot of conflicts with the project’s chief engineer. As a result, he was never able to finish the machine’s final design. Ada’s algorithm was never tested.

3. The First Computer Bug Was Actually a Dead Bug

A computer bug is defined as an error or fault due to a flaw in the computer program or system. This bug can cause the program to produce an incorrect result, or behave unexpectedly.
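As a small illustration of that definition, here is a made-up Python example (the function names are invented for this article). The first function runs without complaint but gives the wrong answer, which is exactly what makes bugs tricky. The second one fixes the flaw.

```python
def average_buggy(scores):
    # Bug: divides by a hard-coded 3 instead of the number of scores,
    # so the result is wrong whenever the list doesn't have 3 items.
    return sum(scores) / 3


def average_fixed(scores):
    # Fix: divide by how many scores there actually are.
    return sum(scores) / len(scores)


if __name__ == "__main__":
    scores = [80, 90, 100, 70]
    print("Buggy average:", average_buggy(scores))
    print("Fixed average:", average_fixed(scores))  # prints 85.0
```

Notice that the buggy version reports an average higher than any single score, a good hint that something is off.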

The first person to use the word “bug” to describe a technological error was Thomas Edison.

He used it in a letter that dates back to 1878. However, it wasn’t until 70 years later that the term became so popular.

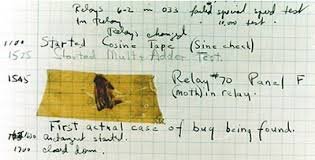

At 3:45 p.m. on September 9, 1947, the first actual incident of a computer bug was recorded. It was recorded by Grace Hopper, an American computer scientist and U.S. Navy officer. She was working on the Harvard Mark II computer and recorded the bug in the computer’s logbook.

After she noticed a problem in the computer’s operation, she started tracing it. Hopper found a dead moth stuck between the relay contacts of the computer. She removed the moth and taped it into the Mark II’s logbook. Beneath the moth, she wrote “First actual case of bug being found.” Although there are some claims that other people found the moth in the computer, Hopper is the one credited with the logbook entry.

4. The First Computer Virus Wasn’t Meant to Be Harmful

In the popular sci-fi cliché, technology goes rogue and turns against humans. In real life, the first computer virus had no such ambitions.

A computer virus is an infective computer program. Once a virus is executed, it starts replicating itself by inserting its own code into other programs. When the replication succeeds, the affected computer is said to be infected with a computer virus.

The reason for calling it a virus is that it closely resembles the biological virus. A real virus also invades living cells and inserts its genome (genetic code), so it can replicate itself.

The first person to work on the theory of a self-replicating program was John von Neumann in 1949. However, it was Fred Cohen who called it a “computer virus”.

Fred Cohen’s Virus

Frederick B. Cohen is a famous American computer scientist. He is famous not only for making a virus, but also for inventing virus defense techniques.

In 1983, he designed a parasitic computer program that could infect other computers through storage disks. This is considered the first virus. Once an infected floppy disk was inserted into a healthy computer, his virus would seize the computer and make copies of itself, ready to infect other computers. However, he designed the virus as a test to prove the concept, so it didn’t cause any harm to the computers.

He also developed the concept of a “good virus”, which he called a “compression virus”. This virus would look for uninfected executable files, ask for the user’s permission to compress them, and then attach itself to them.

5. The First Computer Game Didn’t Make Any Profits

Here’s a fact: computer games are now so popular that the games industry generates more revenue than the movie industry! According to TechJury, the video game industry generated almost $135 billion in 2018. TechJury reports this as a 10.9% increase in a single year and puts the 2017 figure at $116 billion. Not only that, but the gaming industry was also forecast to reach the $180 billion mark in 2021!

But did you know that what’s considered the first-ever program meant to be played as a game wasn’t even a commercial success?!

Spacewar!

There were some earlier examples of computer games, such as “OXO” in 1952 and “Tennis for Two” in 1958. However, a lot of historians credit “Spacewar!” as the first true digital computer game.

This game is the brainchild of a team of computer programmers from the Massachusetts Institute of Technology (MIT). Steve Russell, Martin Graetz, and Wayne Wiitanen conceived the idea in 1961.

It took the team about 200 total hours to write the first version of the program. The game was released in February 1962.

The Game’s Idea

The idea of Spacewar! is simple. Using front panel switches, two players each control one of two fighter spaceships. Your mission is to destroy the opponent’s ship first.

The interesting part is that you also have to avoid the tiny dot at the center of the screen, which represents a star. A ship that hits the star is destroyed too. You can test out the game yourself online.

The team wrote the game’s code on a PDP-1, which is the earliest computer produced by Digital Equipment Corporation (DEC). The small, interactive computer used a cathode-ray tube display, which was innovative technology back then.

The game became extremely popular within the computer programming community. However, it failed to translate its potential into commercial success.

6. Computer Programming Played an Important Role in Ending World War II

Alan Turing

Alan Turing, an English mathematician, is recognized by many as the father of modern computer science. His work laid the foundations for the concepts of computation and algorithms. However, what many people might not know is that Turing played a pivotal role in bringing World War II to an end.

The Nazis used an encryption machine called Enigma, whose messages were very difficult to decipher. However, Turing used his cryptologic and algorithmic skills to help break the Enigma codes. This helped the Allies win the war and saved countless lives.

In his honor, the Association for Computing Machinery (ACM) named its award after him. The Turing Award is given to individuals in recognition of their contributions to computer science.

7. It Took Less Code to Send a Man to Space Than to Run a Smartphone

When NASA’s reusable Space Shuttle first went into space in 1981, it had less code than the phones in our pockets!

Surprisingly, NASA still uses computer programs from the 70s in some of its spacecraft. It would be extremely costly for NASA to write new code and design new computer programs. Additionally, designing a new computer program requires a lot of testing and carries a risk of failure. The old technology still works to this day, so there’s little need to replace it.

8. Computer Programming Language Diversity

There are many countries that speak more than one language. According to VistaWide, Papua New Guinea is the country with the highest number of languages (820), accounting for 11.86% of the world’s languages. Indonesia comes as a close second with 742 languages, and Nigeria with more than 500.

Now imagine there’s a country where the people only speak in computer languages. If that happened, it would, at the very least, knock Nigeria out of third place!

In fact, Wikipedia currently lists over 700 programming languages. However, this list leaves out some kinds of languages, such as markup languages (HTML, for example). There are also other lists, such as FOLDOC, that include more than 1,000 languages.

9. The Famous Programming Language “C” Is Named So Because It Has a Predecessor Called “B”

The Programming Language “C” is one of the most popular languages in the history of computer science.

Dennis Ritchie, 2011

In fact, a lot of modern programming languages are based mainly on “C”, for example, C++, C#, Perl, and Java.

However, there was a predecessor language called “B”. The language was created in 1969 by Ken Thompson, a Turing Award-winning computer scientist.

The origin of the name “B” isn’t certain. Dennis Ritchie later wrote that it was most probably a contraction of BCPL, the language B was derived from, although it is also sometimes linked to “Byte” or to “Bell”, since Thompson worked at Bell Laboratories for most of his career. “B” was later improved by Dennis Ritchie to create “C”.

10. Depending on the Project, You Might Use a Variety of Languages

When building a website in particular, three languages are used at the same time - HTML, CSS, and JavaScript. HTML builds the main structure of the page, CSS is in charge of the design elements of the page, and JavaScript controls anything that you can click or that moves on the page. The three languages work together to make the website work. As a matter of fact, a number of other languages work in tandem to help websites work as well, like PHP and MySQL among many others.

However, for most projects, you will stick to one language. The great thing is that each language you learn helps you to learn the next one. They all work on the foundation of logic - even if the syntax changes from language to language.
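To see how several languages can share one project, here is a small Python sketch that builds the kind of web page described above. It is only an illustration: the function name `build_page`, the button, and the styling are all invented for this example. A single Python program ends up producing HTML for structure, CSS for design, and JavaScript for the clickable part.

```python
from html import escape


def build_page(title, message):
    """Return a tiny web page as a string (hypothetical example)."""
    # CSS: controls how the heading looks.
    css = "h1 { color: navy; }"
    # JavaScript: makes the button react when it is clicked.
    js = "document.querySelector('button').onclick = () => alert('Hi!');"
    # HTML: the structure that holds everything together.
    return f"""<!DOCTYPE html>
<html>
<head>
<title>{escape(title)}</title>
<style>{css}</style>
</head>
<body>
<h1>{escape(message)}</h1>
<button>Click me</button>
<script>{js}</script>
</body>
</html>"""


if __name__ == "__main__":
    # Save the page so it can be opened in a web browser.
    with open("demo.html", "w", encoding="utf-8") as page_file:
        page_file.write(build_page("Demo", "Hello, coders!"))
```

Opening the saved `demo.html` file in a browser should show a navy heading and a button that pops up a greeting.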

Wrap Up

There you have it! 10 interesting facts about computer programming that you might not have known, but now you do!

If you liked the article, make sure that you share these cool facts on your social media accounts!
